OpenThaiGPT 1.0.0 7B beta GPTQ 4 bit

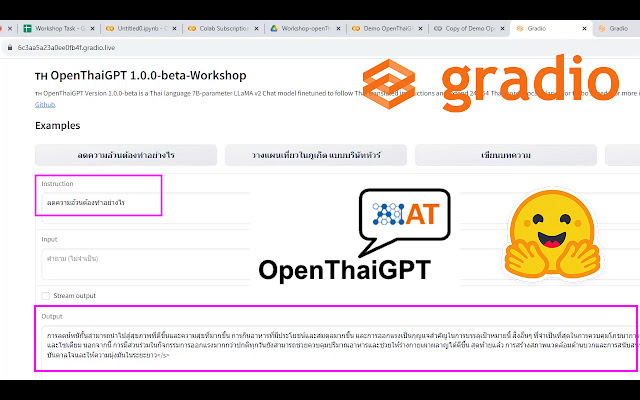

This post tests running the OpenThaiGPT 1.0.0 7B beta model in 4-bit GPTQ form.

GPTQ-for-LLaMA

4-bit quantization of LLaMA using GPTQ. GPTQ is a state-of-the-art (SOTA) one-shot weight quantization method. This code is based on GPTQ. There is a pytorch branch that allows you to use groupsize and act-order together.

Original Model (FP16)

https://huggingface.co/Adun/openthaigpt-1.0.0-beta-7b-ckpt-hf

GPTQ 4 bit Model

https://huggingface.co/Adun/openthaigpt-1.0.0-7b-chat-beta-gptq-4bit

Demo Code

Text generation web UI: a Gradio web UI for Large Language Models.

Setting Parameters

Test on

Hardware

CPU: Intel i9-7900 3.3GHz

GPU: NVIDIA RTX-2060 6GB

Software

OS: Ubuntu 20.04.6 LTS

Youtube Demo

ChatBot Q&A

Run Demo code

Reference

GPTQ-for-LLaMa: https://github.com/amphancm/GPTQ-for-LLaMa

OpenThaiGPT: https://openthaigpt.aieat.or.th/
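Rough weight-memory arithmetic suggests why a 4-bit model suits the 6 GB GPU listed in the test setup. The sketch below is a back-of-the-envelope estimate, not a measurement of this model. It assumes a nominal 7 billion parameters, a group size of 128, and one FP16 scale plus one 4-bit zero point stored per group. None of these values come from the post. The helper name `weight_memory_gib` is hypothetical, and activations and the KV cache are ignored.

```python
GIB = 1024 ** 3  # bytes per GiB


def weight_memory_gib(n_params, bits_per_weight, group_size=None,
                      overhead_bits_per_group=0):
    """Estimate the memory needed for model weights alone, in GiB.

    group_size and overhead_bits_per_group model the per-group metadata
    (scale and zero point) that group-wise quantization stores.
    """
    total_bits = n_params * bits_per_weight
    if group_size:
        n_groups = n_params / group_size
        total_bits += n_groups * overhead_bits_per_group
    return total_bits / 8 / GIB


N_PARAMS = 7e9   # assumption: nominal "7B" parameter count
GPU_GIB = 6      # RTX-2060 memory from the test setup, treated as GiB

fp16_gib = weight_memory_gib(N_PARAMS, 16)
# Assumption: group size 128, FP16 scale (16 bits) + 4-bit zero point per group.
gptq4_gib = weight_memory_gib(N_PARAMS, 4, group_size=128,
                              overhead_bits_per_group=16 + 4)

print(f"FP16 weights : {fp16_gib:.2f} GiB (fits in {GPU_GIB} GiB: {fp16_gib < GPU_GIB})")
print(f"4-bit weights: {gptq4_gib:.2f} GiB (fits in {GPU_GIB} GiB: {gptq4_gib < GPU_GIB})")
```

Under these assumptions the FP16 weights come to roughly 13 GiB, while the 4-bit weights need about 3.4 GiB, which leaves some headroom on a 6 GB card for activations and the cache.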