llama-cpp-python on an NVIDIA RTX 4060 GPU

We tested the llama-cpp-python library on several operating systems:

Windows 11

Ubuntu 22.04

Google Colab (also Ubuntu 22.04)

llama-cpp-python with an RTX 4060 GPU on Windows 11

Install the NVIDIA GPU driver.

We used driver version 537.24.

Then run the nvidia-smi command to check that your GPU is detected.
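The same check can be scripted. The sketch below is only an illustration: it assumes nvidia-smi is on your PATH, and the helper name query_gpu is ours, not part of any library.

```python
import shutil
import subprocess


def query_gpu():
    """Return one CSV line per detected GPU, or None if nvidia-smi is unavailable."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return None  # driver not installed, or nvidia-smi is not on PATH
    try:
        result = subprocess.run(
            [exe, "--query-gpu=name,driver_version,memory.total", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            timeout=30,
        )
    except (OSError, subprocess.SubprocessError):
        return None
    if result.returncode != 0:
        return None
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


gpus = query_gpu()
if gpus is None:
    print("nvidia-smi not found or failed; check the driver installation")
else:
    for line in gpus:
        print(line)  # GPU name, driver version, total memory
```

If the driver is installed correctly, each printed line should show the GPU name followed by the driver version and total memory.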

Install the CUDA Toolkit: https://developer.nvidia.com/cuda-downloads

We used CUDA 12.1.
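To confirm which CUDA Toolkit release is actually on your PATH, you can read the output of nvcc --version. This is a minimal sketch, assuming nvcc was installed with the toolkit; the helper names are hypothetical.

```python
import re
import shutil
import subprocess


def parse_cuda_release(nvcc_output):
    """Extract the 'release X.Y' number from `nvcc --version` output."""
    match = re.search(r"release (\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def installed_cuda_release():
    """Return the toolkit release as a string, or None if nvcc is not available."""
    exe = shutil.which("nvcc")
    if exe is None:
        return None
    try:
        result = subprocess.run(
            [exe, "--version"], capture_output=True, text=True, timeout=30
        )
    except (OSError, subprocess.SubprocessError):
        return None
    return parse_cuda_release(result.stdout)


print("CUDA Toolkit release:", installed_cuda_release())
```

The release number matters later because the prebuilt llama-cpp-python wheel index used in this post is specific to CUDA 12.1 (cu121).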

We used Python 3.11.7.

Check python and pip from the command line.
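If several Python installations exist on the machine, it helps to confirm that pip belongs to the interpreter you intend to use. A small sketch, with a hypothetical helper name:

```python
import subprocess
import sys


def pip_version_line():
    """Ask the current interpreter's pip for its version; None if pip is missing."""
    try:
        result = subprocess.run(
            [sys.executable, "-m", "pip", "--version"],
            capture_output=True,
            text=True,
            timeout=60,
        )
    except (OSError, subprocess.SubprocessError):
        return None
    return result.stdout.strip() if result.returncode == 0 else None


print("Python:", sys.version.split()[0])
print("Interpreter:", sys.executable)
print("pip:", pip_version_line())
```

The pip line includes the site-packages path, so you can see which Python installation the packages will go into.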

Install PyTorch: https://pytorch.org/get-started/locally/

Run pip list, then check in a Python shell that CUDA is available on your GPU.

If the check returns False, PyTorch cannot use the NVIDIA GPU. Find and fix the problem before continuing.
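The CUDA check in the Python shell can look like the sketch below. It uses torch.cuda.is_available() and torch.cuda.get_device_name(); the wrapper function cuda_status is our own illustration.

```python
def cuda_status():
    """Report whether PyTorch is installed and whether it can see a CUDA GPU."""
    try:
        import torch
    except ImportError:
        return {"torch": None, "cuda_available": False, "device": None}
    available = torch.cuda.is_available()
    return {
        "torch": torch.__version__,
        "cuda_available": available,
        # Only query the device name when a GPU is actually visible.
        "device": torch.cuda.get_device_name(0) if available else None,
    }


print(cuda_status())
```

If cuda_available is False, common causes are a CPU-only PyTorch build or a driver that is too old for the installed CUDA version.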

Install llama-cpp-python: https://pypi.org/project/llama-cpp-python/

With CUDA 12.1, use the following command.

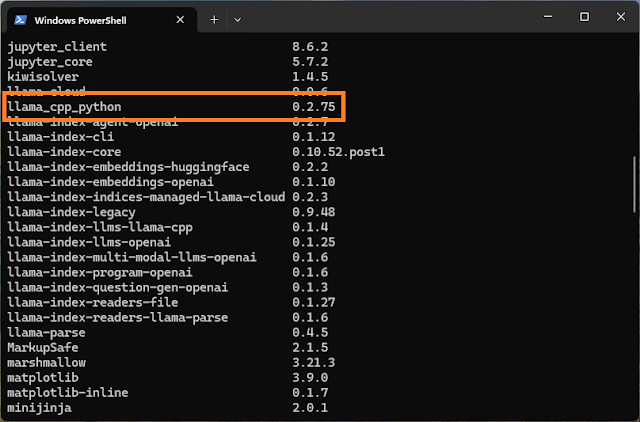

As of Jul 2, 2024, the latest release was 0.2.81, but we used 0.2.75, which works fine (0.2.81 has not been tested on Windows 11 yet).

pip install llama-cpp-python --extra-index-url https://abetlen.github.io/llama-cpp-python/whl/cu121

Then run pip list to confirm the library is installed.
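Instead of scanning the pip list output by eye, you can ask Python directly which version is installed. This is a minimal sketch using the standard-library importlib.metadata module; the function name is hypothetical.

```python
from importlib import metadata


def installed_version(package="llama-cpp-python"):
    """Return the installed version of a package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


print("llama-cpp-python:", installed_version())
```

In our Windows 11 setup this should report 0.2.75.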

How to check that llama-cpp-python works with CUDA?

Run LLM code that loads a model to test the llama-cpp-python library.

We tested our code in a Jupyter notebook in VS Code.

We loaded the Llama3-Typhoon1.5-8b model in 4-bit GGUF format from Hugging Face (scb10x/llama-3-typhoon-v1.5-8b-instruct).
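A load test along these lines might look like the sketch below. It is not the exact notebook code: the model path and file name are hypothetical placeholders for wherever you saved the GGUF file, and the prompt and parameter values are only examples.

```python
from pathlib import Path

# Hypothetical local path; point this at the GGUF file you downloaded.
MODEL_PATH = Path("models/llama-3-typhoon-v1.5-8b-instruct.Q4_K_M.gguf")


def run_smoke_test(model_path, prompt="Hello, who are you?"):
    """Load the model with all layers offloaded to the GPU and return one short reply."""
    # Imported inside the function so the file check below runs even without the library.
    from llama_cpp import Llama

    llm = Llama(
        model_path=str(model_path),
        n_gpu_layers=-1,  # -1 asks llama.cpp to offload every layer to the GPU
        n_ctx=2048,       # example context size
        verbose=True,     # print the load log so you can see what was offloaded
    )
    out = llm.create_chat_completion(
        messages=[{"role": "user", "content": prompt}],
        max_tokens=64,
    )
    return out["choices"][0]["message"]["content"]


if MODEL_PATH.exists():
    print(run_smoke_test(MODEL_PATH))
else:
    print(f"Model file not found: {MODEL_PATH}")
```

With verbose=True the load log should mention the CUDA device and the layers offloaded to the GPU. While the model is loaded, nvidia-smi should also show the Python process using GPU memory.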

Now you can use the llama-cpp-python library on Windows 11.

llama-cpp-python with an RTX 4060 GPU on Ubuntu 22.04.3 LTS

Install the NVIDIA GPU driver.

We used NVIDIA driver version 535.

Install PyTorch: https://pytorch.org/get-started/locally/

Run pip list, then check in a Python shell that CUDA is available on your GPU.

If the check returns False, PyTorch cannot use the NVIDIA GPU. Find and fix the problem before continuing.

Install llama-cpp-python: https://pypi.org/project/llama-cpp-python/

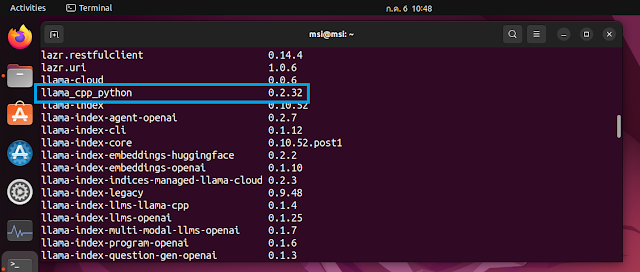

As of Jul 2, 2024, the latest release was 0.2.81, but we used 0.2.32, which works fine (0.2.81 has not been tested on Ubuntu yet).

Install command:

CMAKE_ARGS="-DLLAMA_CUDA=on" pip install llama-cpp-python==0.2.32

How to check that llama-cpp-python works with CUDA?

Run LLM code that loads a model to test the llama-cpp-python library.

We tested our code in a Jupyter notebook in VS Code.

We loaded the Llama3-Typhoon1.5-8b model in 4-bit GGUF format from Hugging Face (scb10x/llama-3-typhoon-v1.5-8b-instruct).
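Because the Ubuntu install compiles the library from source, it can be worth asking the build itself whether GPU offload was compiled in before loading a large model. The sketch below assumes your installed version exposes the low-level llama_supports_gpu_offload binding; older releases (possibly including 0.2.32) may not, in which case the function returns None and the model-load test is the only check.

```python
def gpu_offload_supported():
    """Return True/False if the build reports GPU offload support, None if unknown."""
    try:
        import llama_cpp
    except (ImportError, OSError, RuntimeError):
        # Library not installed, or its shared library failed to load.
        return None
    # Assumption: this binding exists only in sufficiently recent versions.
    check = getattr(llama_cpp, "llama_supports_gpu_offload", None)
    if check is None:
        return None
    return bool(check())


print("GPU offload supported:", gpu_offload_supported())
```

A result of False usually means the package was built without the CUDA option, so it should be reinstalled with CMAKE_ARGS set as in the install command above.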
