Llama3 Typhoon v1.5 (scb10x) LLM


Typhoon-1.5 models come in 8B and 72B sizes

These models are built on the Llama3 8B and Qwen 72B base models, respectively. The 8B weights are released under the Meta Llama 3 Community License, and the 72B weights under the Tongyi Qianwen License.

Performance

To gain insight into Typhoon’s performance, we evaluated it on multiple-choice exams:

Language & Knowledge Capabilities: We assessed Typhoon on multiple-choice question answering datasets, including ThaiExam, M3Exam, and MMLU. The ThaiExam dataset was sourced from standard examinations in Thailand, including ONET, TGAT, TPAT, and A-Level. M3Exam is a benchmark for Southeast Asian countries, including Thailand. MMLU is a standard benchmark for language models in English.
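The exact scoring procedure is not spelled out here. A common way to grade multiple-choice benchmarks like these is to let the model pick one option letter per question and report accuracy. The sketch below assumes per-option scores (for example, log-likelihoods) have already been computed; `choose_answer` and `accuracy` are hypothetical helpers, not part of any Typhoon evaluation code.

```python
from typing import Dict, Sequence


def choose_answer(option_scores: Dict[str, float]) -> str:
    """Return the option letter with the highest model score (e.g. log-likelihood)."""
    return max(option_scores, key=lambda option: option_scores[option])


def accuracy(predictions: Sequence[str], gold: Sequence[str]) -> float:
    """Fraction of questions where the predicted letter matches the answer key."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        return 0.0
    correct = sum(p == g for p, g in zip(predictions, gold))
    return correct / len(gold)
```

For example, `accuracy(["A", "B", "C", "D"], ["A", "B", "D", "D"])` gives 0.75, since three of the four answers match.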

Typhoon-1.5X is an eXperimental model designed for application use cases. It features improved capabilities in Retrieval-Augmented Generation (RAG), constrained generation, and reasoning, with the goal of competitive instruction-following performance and better human alignment in Thai.
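To make the RAG use case concrete, here is a minimal sketch of the retrieve-then-prompt pattern. It is not Typhoon's own pipeline: the word-overlap retriever and the prompt wording are illustrative assumptions, and whitespace tokenization works poorly for Thai (which does not separate words with spaces), so a real Thai setup would use a Thai word segmenter or embedding-based retrieval instead.

```python
from typing import List


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Rank documents by how many lowercase whitespace tokens they share with the query."""
    query_tokens = set(query.lower().split())
    # sorted() is stable, so ties keep their original document order.
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_rag_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Put the top-k retrieved passages in front of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
        "Answer:"
    )
```

The resulting string is what would be sent to the instruction-tuned model; grounding the answer in retrieved passages is the behavior the 1.5X release aims to improve.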

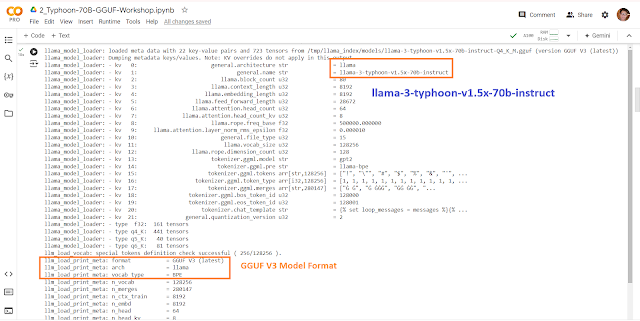

Llama3-Typhoon v1.5x 70B 4-bit GGUF Demo on Colab

https://huggingface.co/Adun/llama-3-typhoon-v1.5x-70b-instruct-gguf

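Because the 4-bit 70B model does not fit entirely in the Colab GPU's memory, we cannot offload all 81 layers. Instead, we set model_kwargs={"n_gpu_layers": 70}, which keeps 70 layers on the GPU and runs the remaining layers on the CPU.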
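Picking a value for `n_gpu_layers` is mostly memory arithmetic. The rough estimate below is a sketch under simplifying assumptions: every layer is treated as the same size (file size divided by layer count), and `reserve_gb` is a hypothetical headroom for the KV cache and CUDA overhead. Neither rule comes from llama.cpp itself, so the result is only a starting point to adjust by trial.

```python
import math


def gpu_layers_that_fit(model_size_gb: float, n_layers: int, vram_gb: float,
                        reserve_gb: float = 2.0) -> int:
    """Estimate how many layers can be offloaded to the GPU.

    Assumes equal-sized layers and keeps reserve_gb of VRAM free for the
    KV cache and runtime overhead.
    """
    if n_layers <= 0:
        raise ValueError("n_layers must be positive")
    per_layer_gb = model_size_gb / n_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    return min(n_layers, math.floor(usable_gb / per_layer_gb))
```

If the estimate still runs out of memory at load time, lowering the value a few layers at a time is the usual fix.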

Model Inference

Install llama-cpp-python
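After installing llama-cpp-python (for example with `pip install llama-cpp-python`, using a build with GPU support so layers can be offloaded), inference can be sketched as follows. This assumes the Typhoon v1.5x GGUF uses the standard Llama 3 Instruct chat template. The `n_ctx=4096` value and the helper names are illustrative choices, not taken from the original notebook.

```python
def format_llama3_chat(messages: list, include_bos: bool = False) -> str:
    """Render chat messages in the Llama 3 Instruct template and open an assistant turn.

    Assumption: the Typhoon v1.5x GGUF uses the standard Llama 3 Instruct template.
    <|begin_of_text|> is omitted by default on the assumption that llama-cpp-python
    adds the BOS token itself when it tokenizes the prompt.
    """
    parts = ["<|begin_of_text|>"] if include_bos else []
    for message in messages:
        parts.append(
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content'].strip()}<|eot_id|>"
        )
    # Leave an open assistant header so the model writes the reply next.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


def generate(model_path: str, prompt: str, n_gpu_layers: int = 70,
             max_tokens: int = 256) -> str:
    """Run a GGUF model with llama-cpp-python, offloading n_gpu_layers layers to the GPU."""
    from llama_cpp import Llama  # imported lazily so format_llama3_chat works without the package

    llm = Llama(model_path=model_path, n_gpu_layers=n_gpu_layers, n_ctx=4096)
    # Stop at <|eot_id|>, which ends an assistant turn in the Llama 3 template.
    output = llm(prompt, max_tokens=max_tokens, stop=["<|eot_id|>"])
    return output["choices"][0]["text"]
```

Usage would look like `generate(path, format_llama3_chat([{"role": "user", "content": "..."}]))`, with `path` pointing at a GGUF file downloaded from the Hugging Face repository above. The exact filename depends on the quantization you choose, so it is left unspecified here.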

Typhoon API

Access the instruction-tuned Typhoon model through our new API service. It is available for free during the open beta.
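As a rough sketch of calling the API, the snippet below builds a request without sending it. It assumes the Typhoon API follows the OpenAI-compatible chat-completions format at the `API_URL` shown. Both the URL and the request shape are assumptions to check against the official Typhoon documentation, and the model identifier is deliberately left as a parameter rather than guessed.

```python
import json
import urllib.request

# Assumption: an OpenAI-compatible chat-completions endpoint at this URL;
# confirm it against the official Typhoon documentation.
API_URL = "https://api.opentyphoon.ai/v1/chat/completions"


def build_chat_request(api_key: str, model: str, user_message: str,
                       max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) a POST request in the OpenAI chat-completions format."""
    payload = {
        "model": model,  # model identifier taken from the Typhoon docs; not hard-coded here
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending the request is then a matter of `urllib.request.urlopen(request)`. In the OpenAI response format, the reply text is found at `["choices"][0]["message"]["content"]` of the decoded JSON.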

Reference

Typhoon 1.5 Release https://blog.opentyphoon.ai/typhoon-1-5-release-a9364cb8e8d7
